
AI is transforming software engineering. You can code a new payment service in minutes with AI-assisted coding. But code is only one of the dozens of tools engineers use to deliver value in production. The real bottleneck is not writing code. It is thinking holistically about context from the full software development lifecycle: writing code, but also safely releasing it, efficiently operating it, troubleshooting it when things break, and feeding those learnings back into better code. It is only a matter of time before AI helps tighten and automate this loop between code and production.

That context shows up everywhere: change rollouts, on-call readiness, cost optimization, compliance, telemetry design, and even feature planning. Yet these workflows are fragmented across tools and conventions. When things break, engineers jump between tools like Datadog and CloudWatch for metrics and Splunk or Loki for logs, then on to feature flags, deployments, infrastructure, and code, piecing together a mental model by hand.

In most organizations, engineers can spend upwards of 74 percent of their time outside of coding, most of it on operational and background work that consumes engineering capacity.¹

To be a truly AI-first software engineering organization, businesses need to extend intelligence into every part of production. Safely releasing changes, efficiently operating systems, troubleshooting incidents in real time, and learning from each failure are just as crucial as writing the code itself. This is where the next frontier lies. At Resolve AI, we are pioneering the use of AI in production engineering, building systems that intuitively understand your environment, collaborate with your teams, and close the loop between code and operations.

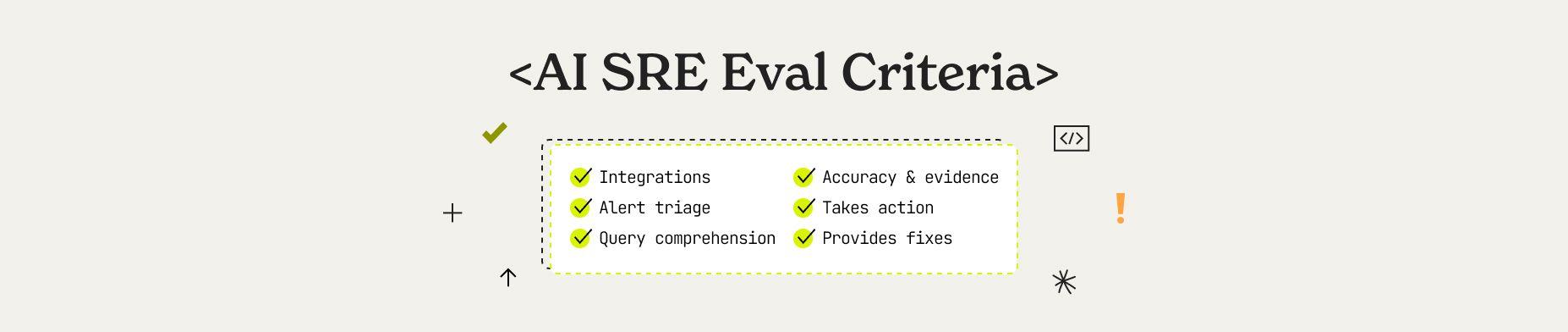

In this blog, you will learn how to evaluate agentic AI to automate SRE and production engineering:

Technology under the hood matters as much as outcomes. A production-grade system for an AI SRE is not a search engine, a chatbot, or a script runner. It should combine five core capabilities, each critical on its own and extremely powerful together:

Incomplete approaches cannot check these boxes. Retrieval-based or summarization systems may make data easier to browse, but they cannot reason across incidents, find causal chains, or adapt when their first hypothesis is wrong. Many in-house projects and commercial offerings fall into this camp. Similarly, automation-first approaches that execute pre-curated runbooks or scripts can be helpful for repetitive tasks, but they do not understand context or explain why an incident occurred.

Resolve AI is the only multi-agent system designed around five key pillars: knowledge, reasoning, action, learning, and collaboration, which enable it to operate as a true teammate in production.

Most organizations start with a small pilot, testing a few historical incidents or running staged experiments. These are useful first steps, especially where compliance makes direct access to production harder.

But pilots only tell part of the story. Retrieval-augmented search and single-model connectors can look good in a controlled demo. In production, with messy data and unpredictable failures, they fall apart. The actual test is whether the AI SRE adapts, reasons, and collaborates at the speed and scale of your environment.

As McKinsey noted in 2024: “Most CIOs know that pilots do not reflect real-world scenarios; that is not really the point of a pilot. Yet they often underestimate the amount of work required to make generative AI production-ready.” ² MIT Sloan reinforced this in 2025: “Only 5 percent of generative AI pilots succeed… the 95 percent lean on generic tools, slick enough for demos, brittle in workflows.” ³

A strong evaluation framework is grounded in the two modes in which an AI SRE is used: wartime, when live incidents put systems under stress, and peacetime, when engineers rely on the platform for day-to-day production operations. A credible AI SRE must prove it delivers in both.

The most effective path to clarity is to test in production on real incidents, both historical and those occurring during the PoC. The goal is to see how the system behaves with messy, high-variance data, novel issues, and the stressful real-world conditions engineers face. This is not a side experiment or a demo environment. Plan the evaluation into a sprint or two where on-call engineers, site reliability engineers, and application developers actually use the system during their everyday workflow. That is the only way to measure whether it reduces toil and accelerates recovery in practice.

Focus on concrete, measurable criteria:

For most organizations, a successful evaluation should show a combination of:

Incidents may define the peaks, but most of the value comes from everyday usage. An AI SRE that only performs in a crisis but sits idle the rest of the time will not transform how your engineers work. Evaluation should therefore also measure how well the system supports engineers when nothing is on fire.

Use cases can include:

The reality is that while there are common KPIs you can assess, there is no single universal metric. It depends on how your business operates, the challenges your teams face, the specific use cases you are testing, and the outcomes that matter most. The goal should always be to measure against both hard metrics, such as MTTR and SLA performance, and soft outcomes like reduced alert fatigue, faster onboarding, and more time for strategic engineering work.
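As a minimal sketch of the hard-metric side, the snippet below computes MTTR over a baseline period and a pilot period and reports the improvement ratio. The function names and the incident timestamps are hypothetical, chosen only for illustration; in practice the (opened, resolved) pairs would come from your own incident tracker.

```python
from datetime import datetime
from statistics import mean

def mttr_minutes(incidents):
    """Mean time to resolve, in minutes, over (opened, resolved) timestamp pairs."""
    durations = [
        (resolved - opened).total_seconds() / 60
        for opened, resolved in incidents
    ]
    return mean(durations)

def mttr_improvement(before, after):
    """Ratio of baseline MTTR to pilot MTTR (values above 1 mean faster recovery)."""
    return mttr_minutes(before) / mttr_minutes(after)

# Hypothetical incident timestamps, for illustration only.
baseline = [
    (datetime(2025, 1, 6, 3, 0), datetime(2025, 1, 6, 5, 0)),     # 120 minutes
    (datetime(2025, 1, 9, 14, 0), datetime(2025, 1, 9, 15, 0)),   # 60 minutes
]
pilot = [
    (datetime(2025, 2, 3, 3, 0), datetime(2025, 2, 3, 3, 30)),    # 30 minutes
    (datetime(2025, 2, 7, 14, 0), datetime(2025, 2, 7, 14, 15)),  # 15 minutes
]

print(mttr_minutes(baseline))             # 90.0
print(mttr_improvement(baseline, pilot))  # 4.0
```

A single ratio like this is only one input. It says nothing about the soft outcomes, which usually need surveys or on-call feedback to capture.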

The strongest systems also show transferability of learnings between wartime and peacetime. Patterns discovered during incidents should improve day-to-day operations, and routine troubleshooting should sharpen the system for the next outage. Without this cycle, you end up with a tool that may look impressive in a crisis but provides little ongoing value.

These six dimensions are how you separate systems that only look good in pilots from those that can deliver in production.

A complete evaluation should measure not only how the system performs under incident pressure, but also how it contributes to reliability and engineering velocity in everyday operations. In other words, it should prove its value both when systems break and when they are running normally.

These dimensions apply both when systems break and when they run smoothly, covering use cases from safer change rollouts and compliance checks to telemetry design and cost optimization.

Beyond technical capabilities, an AI SRE must be ready for the realities of enterprise adoption. When evaluating, look for:

Using tools like a human to investigate logs

At 03:12, error rates spike for the storage service in us-east-1. The AI SRE translates “Why are we seeing 502 errors in storage?” into tool-specific queries. Within a minute, it surfaces a cluster of “TLS certificate expired” messages from the load balancer logs, links the error onset to the exact timestamp the certificate validity ended, and highlights the certificate ID.

It then cross-checks recent infrastructure events, sees no changes to the load balancer config, and concludes the outage is due to certificate expiry rather than a deployment regression. It suggests executing the pre-approved certificate reissue workflow and confirms with the engineer before any action is taken.
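The core check in this scenario, linking the error onset to the moment the certificate stopped being valid, can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not how any particular product implements it: the helper names, the two-minute tolerance, and the sample log lines are all hypothetical.

```python
from datetime import datetime, timedelta

def first_error_time(log_lines, needle):
    """Return the timestamp of the earliest log line containing `needle`, or None."""
    times = [ts for ts, message in log_lines if needle in message]
    return min(times) if times else None

def onset_matches_expiry(onset, not_after, tolerance=timedelta(minutes=2)):
    """True when errors began at, or shortly after, the end of certificate validity."""
    return onset is not None and timedelta(0) <= onset - not_after <= tolerance

# Hypothetical data for illustration; these are not real logs.
cert_not_after = datetime(2025, 1, 6, 3, 12, 0)
lb_logs = [
    (datetime(2025, 1, 6, 3, 11, 58), "GET /objects 200"),
    (datetime(2025, 1, 6, 3, 12, 4), "upstream error: TLS certificate expired"),
    (datetime(2025, 1, 6, 3, 12, 9), "upstream error: TLS certificate expired"),
]

onset = first_error_time(lb_logs, "TLS certificate expired")
print(onset_matches_expiry(onset, cert_not_after))  # True
```

A match here supports the expiry hypothesis but does not prove it, which is why the scenario also rules out recent load balancer changes before drawing a conclusion.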

Multi-hop reasoning under pressure

At 14:23, the payment service starts timing out. Alerts fire. A traditional investigation would start in the payment service dashboard, check error rates, then hop to logs for stack traces. If no obvious culprit appears, the engineer pivots to recent deployment history, then upstream services.

In this case, the system starts two investigations in parallel. Within 90 seconds, it correlates logs from payment-service and auth-service, and sees connection timeout errors in the payment logs alongside “max connections reached” errors in the database logs. It concludes that the auth-service is overwhelming the DB connection pool, causing cascading timeouts in the payment-service.

From there, it suggests two immediate safe actions: throttling auth-service requests to the DB, or rolling back auth-service to the last stable deployment. It posts the findings and options into the incident Slack channel with confidence scores, letting the on-call engineer choose the path forward.

Result: Root cause identified and remediation in progress within minutes, rather than hours.
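To make the correlation step concrete, here is a simplified sketch that gathers error messages per service within a time window and applies one hand-written rule. It is an assumption-laden illustration: a real system would reason over far more signals, and the function names, the "database" service label, and the sample events are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def signals_in_window(events, start, window):
    """Collect the set of messages seen per service within [start, start + window]."""
    seen = defaultdict(set)
    for ts, service, message in events:
        if start <= ts <= start + window:
            seen[service].add(message)
    return seen

def pool_exhaustion_hypothesis(seen):
    """Hypothetical rule: payment timeouts plus DB connection exhaustion
    in the same window suggest a shared connection pool is saturated."""
    payment_timeouts = any(
        "connection timeout" in m for m in seen.get("payment-service", ())
    )
    db_exhausted = any(
        "max connections reached" in m for m in seen.get("database", ())
    )
    return payment_timeouts and db_exhausted

# Hypothetical events for illustration; these are not real logs.
alert_time = datetime(2025, 1, 6, 14, 23, 0)
events = [
    (datetime(2025, 1, 6, 14, 23, 5), "payment-service", "connection timeout to db"),
    (datetime(2025, 1, 6, 14, 23, 7), "database", "max connections reached"),
    (datetime(2025, 1, 6, 13, 0, 0), "database", "checkpoint complete"),
]

seen = signals_in_window(events, alert_time, timedelta(seconds=90))
print(pool_exhaustion_hypothesis(seen))  # True
```

The rule only flags that the pool is saturated. Attributing the load to auth-service, as in the scenario, would need an extra hop such as per-client connection counts.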

Future-state: autonomous action within guardrails

In a not-so-distant future, imagine a new feature flag rollout causes traffic imbalance across regions. Latency spikes in one region while another remains stable. A more advanced system could identify the feature flag change, run a canary, confirm the rollback restores balance, and execute the pre-approved workflow automatically. Recovery is complete in minutes, not because the system replaced the human, but because the human defined the boundaries within which it could safely act. And now the engineer can focus on debugging the new feature flag while the system continues its intended operations.
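One way to picture "the human defined the boundaries" is as an explicit policy object that any proposed action must pass before it runs unattended. The sketch below is hypothetical throughout: the `Guardrail` fields, the action names, and the thresholds are illustrative assumptions, not a description of an existing product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Guardrail:
    """Human-defined limits on what may run without approval (hypothetical schema)."""
    allowed_actions: frozenset
    max_regions_affected: int
    require_canary_pass: bool = True

def may_auto_execute(action, regions_affected, canary_passed, guardrail):
    """Return True only if the proposed action stays inside every boundary."""
    if action not in guardrail.allowed_actions:
        return False
    if regions_affected > guardrail.max_regions_affected:
        return False
    if guardrail.require_canary_pass and not canary_passed:
        return False
    return True

# Hypothetical policy: only feature flag rollbacks, one region at a time.
policy = Guardrail(
    allowed_actions=frozenset({"rollback_feature_flag"}),
    max_regions_affected=1,
)

print(may_auto_execute("rollback_feature_flag", 1, True, policy))   # True
print(may_auto_execute("rollback_feature_flag", 1, False, policy))  # False
print(may_auto_execute("restart_database", 1, True, policy))        # False
```

Anything that fails the check falls back to the human-in-the-loop path from the earlier scenarios, where the system proposes and the engineer decides.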

Every organization has engineers who know the undocumented dependencies, brittle legacy services, and quirks that only surface during high-stakes incidents. Their experience is often the difference between a five-minute fix and a multi-hour outage.

A production-ready system should not replace that knowledge, but capture and scale it. It should work alongside your team as the engineer they can delegate to, reducing false positives, freeing senior engineers from repetitive toil, and giving every responder access to the same depth of context.

This is the dividing line. Incomplete systems, whether retrieval-based search, single-model connectors, or brittle automation, can look good in a demo, but they lack the completeness to handle real-world reliability. A multi-agent system, built on knowledge, reasoning, action, learning, and collaboration, and proven across the six evaluation dimensions, is what it takes to succeed in production.

The bottom line is clear: in a world where reliability is inseparable from customer trust, you cannot afford a system that only looks good on the surface. Evaluate in production, measure both business and human outcomes, and you will know whether you are evaluating another experiment or a true teammate for your production systems.
